We have already written a few articles about web scraping using BeautifulSoup and requests in Python. This is yet another article, in which we will scrape news headlines from a news website.

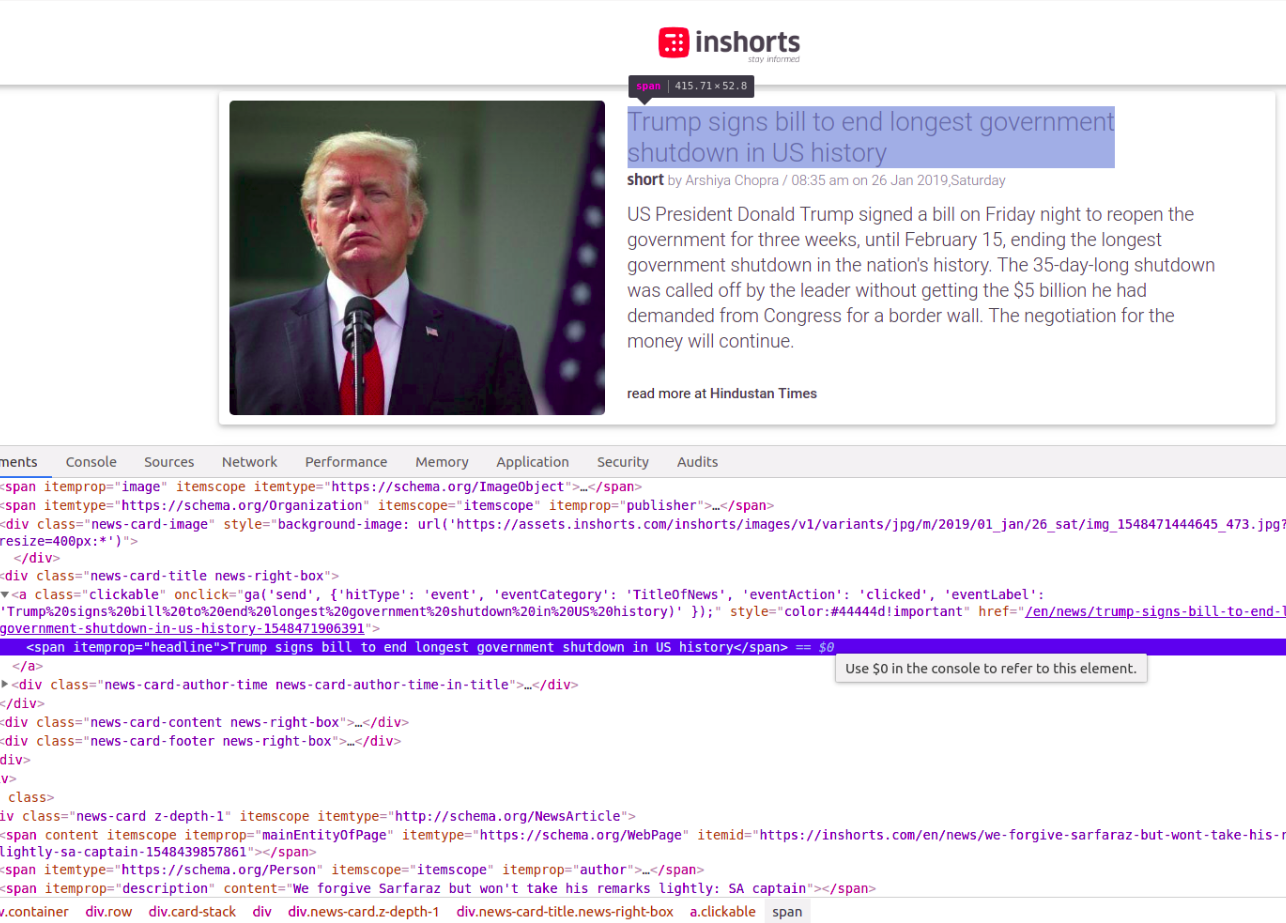

For this article we have chosen the website inshorts.com. Let's start by reading the news from their homepage, https://inshorts.com/en/read/. To scrape the headlines, we need to inspect the headline HTML element.

As we can see, every headline is inside a span HTML tag that has an attribute named itemprop with the value headline. In BeautifulSoup, we can find all elements with a given attribute value using the method find_all(attrs={"attribute_name": "attribute_value"}).
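To see how this lookup behaves without touching the network, here is a minimal sketch run against a small hand-written HTML snippet. The markup and headline strings are made up for illustration and only mimic the structure described above. The html.parser backend is used here so that the snippet does not depend on lxml.

```python
from bs4 import BeautifulSoup

# Hand-written sample markup (an assumption, not copied from inshorts.com).
SAMPLE_HTML = """
<div class="news-card">
  <a href="#"><span itemprop="headline">First sample headline</span></a>
  <span itemprop="author">Someone</span>
</div>
<div class="news-card">
  <a href="#"><span itemprop="headline">Second sample headline</span></a>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all(attrs=...) returns every tag whose attribute has the given value,
# so the itemprop="author" span is skipped.
headline_tags = soup.find_all(attrs={"itemprop": "headline"})
sample_headlines = [tag.text for tag in headline_tags]

print(sample_headlines)
```

Only the two spans marked as headlines are returned; the span with a different itemprop value is ignored.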

Before starting, we strongly recommend creating a virtual environment and installing the dependencies below in it.

    beautifulsoup4==4.6.0
    lxml==4.3.0
    requests==2.21.0

Scrape news website's homepage:

Let's start by getting the response from the homepage URL.

    url = 'https://inshorts.com/en/read'
    response = requests.get(url)

Create a separate function to print the headlines from the response text. This will be helpful later on as well (remember the DRY principle).

    def print_headlines(response_text):
        soup = BeautifulSoup(response_text, 'lxml')
        headlines = soup.find_all(attrs={"itemprop": "headline"})
        for headline in headlines:
            print(headline.text)

Call the print_headlines function and pass response.text to it.

    url = 'https://inshorts.com/en/read'
    response = requests.get(url)
    print_headlines(response.text)

The code so far:

    import requests
    from bs4 import BeautifulSoup
    import json


    def print_headlines(response_text):
        # Parse the HTML and print the text of every headline element.
        soup = BeautifulSoup(response_text, 'lxml')
        headlines = soup.find_all(attrs={"itemprop": "headline"})
        for headline in headlines:
            print(headline.text)


    url = 'https://inshorts.com/en/read'
    response = requests.get(url)
    print_headlines(response.text)

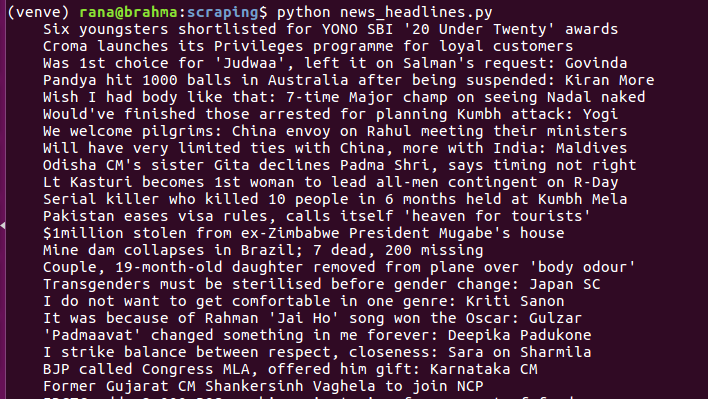

Save this code in a file named, say, news_headlines.py. Activate the virtual environment and run the script with the command python news_headlines.py. The script will print the headlines shown on the first page to the terminal.

The code written so far prints only the headlines shown on the first page. What if we want to fetch more headlines than that?

Fetching more headlines:

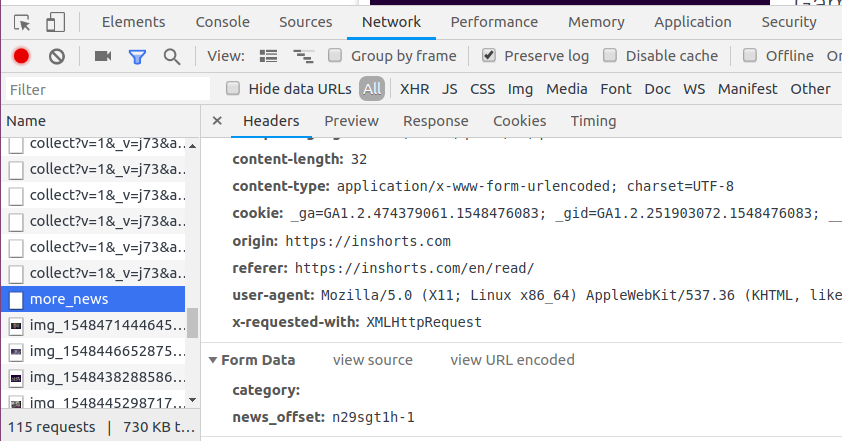

On the news website's homepage, you will see a Load More button at the bottom. Open Chrome DevTools by pressing F12 and click on the Network tab. Here you can see all requests and responses.

When you click the Load More button, a request is sent to the server with two key-value pairs in the form data, category and news_offset, which you can see in the screenshot below.

The value of news_offset can be found in the source code of the homepage. Open the page source and search for the text min_news_id. Use the value of this variable as news_offset.
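Rather than copying the value by hand, it could also be pulled out of the page source with a regular expression. The sketch below assumes the value appears in an inline script as an assignment such as var min_news_id = "..."; that exact form and the helper name extract_min_news_id are assumptions made for illustration, so check the real page source and adjust the pattern if needed.

```python
import re

# Assumed shape of the inline script; verify against the real page source.
MIN_NEWS_ID_PATTERN = re.compile(r'min_news_id\s*=\s*["\']([^"\']+)["\']')


def extract_min_news_id(page_source):
    """Return the min_news_id value found in page_source, or None if absent."""
    match = MIN_NEWS_ID_PATTERN.search(page_source)
    return match.group(1) if match else None


# Hand-written stand-in for the homepage source (not the real page).
sample_source = '<script>var min_news_id = "apwuhnrm-1";</script>'
print(extract_min_news_id(sample_source))
```

With the real page, extract_min_news_id(response.text) on the homepage response could then supply the news_offset value instead of a hard-coded string.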

Post request with form data:

The URL used to load more news headlines is https://inshorts.com/en/ajax/more_news. Let's send a POST request to this URL with the required form data to fetch more headlines. We will send POST requests inside a while loop for as long as we keep getting a 200 OK status.

    url = 'https://inshorts.com/en/ajax/more_news'
    news_offset = "apwuhnrm-1"

    while True:
        response = requests.post(url, data={"category": "", "news_offset": news_offset})
        # Stop as soon as the server answers with anything other than 200 OK.
        if response.status_code != 200:
            print(response.status_code)
            break

        response_json = json.loads(response.text)
        print_headlines(response_json["html"])
        # The returned min_news_id becomes the offset of the next request.
        news_offset = response_json["min_news_id"]

Since the response is a JSON string with two keys, min_news_id and html, we parse it into a Python dictionary and read the values of these two keys. min_news_id is used to send the next POST request, and the html text is passed to the print_headlines function we defined earlier to extract the headlines.
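As written, the loop stops only on a non-200 status. A slightly more defensive variant, sketched below, also stops when a response carries no HTML or after a fixed number of pages. The fetch_page callable, the max_pages limit and the fake_fetch stand-in are all hypothetical names introduced here so that the control flow can be run without hitting the site. Whether the real endpoint ever returns an empty page is not something this article confirms.

```python
def iter_pages(fetch_page, first_offset, max_pages=5):
    """Yield the html of each page, using min_news_id as the next offset."""
    offset = first_offset
    for _ in range(max_pages):
        payload = fetch_page(offset)
        # Stop on a failed request (None) or on a page with no html.
        if not payload or not payload.get("html"):
            break
        yield payload["html"]
        offset = payload["min_news_id"]


# Fake server data keyed by offset, standing in for the real endpoint.
FAKE_PAGES = {
    "start": {"min_news_id": "next-1", "html": "<span>page 1</span>"},
    "next-1": {"min_news_id": "next-2", "html": "<span>page 2</span>"},
    "next-2": {"min_news_id": "next-3", "html": ""},
}


def fake_fetch(offset):
    # Returns None for unknown offsets, like a failed request would.
    return FAKE_PAGES.get(offset)


pages = list(iter_pages(fake_fetch, "start"))
print(pages)
```

In real use, fetch_page would wrap the requests.post call and return the parsed JSON, or None on a non-200 status, and each yielded html string would be passed to print_headlines.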

Complete Code:

The complete Python code to get news headlines is also available on GitHub.

    import requests
    from bs4 import BeautifulSoup
    import json


    def print_headlines(response_text):
        # Parse the HTML and print the text of every headline element.
        soup = BeautifulSoup(response_text, 'lxml')
        headlines = soup.find_all(attrs={"itemprop": "headline"})
        for headline in headlines:
            print(headline.text)


    def get_headers():
        # Browser-like headers sent with the "load more" POST request.
        return {
            "accept": "*/*",
            "accept-encoding": "gzip, deflate, br",
            "accept-language": "en-IN,en-US;q=0.9,en;q=0.8",
            "content-type": "application/x-www-form-urlencoded; charset=UTF-8",
            "cookie": "_ga=GA1.2.474379061.1548476083; _gid=GA1.2.251903072.1548476083; __gads=ID=17fd29a6d34048fc:T=1548476085:S=ALNI_MaRiLYBFlMfKNMAtiW0J3b_o0XGxw",
            "origin": "https://inshorts.com",
            "referer": "https://inshorts.com/en/read/",
            "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36",
            "x-requested-with": "XMLHttpRequest"
        }


    # headlines from the homepage
    url = 'https://inshorts.com/en/read'
    response = requests.get(url)
    print_headlines(response.text)

    # get more news
    url = 'https://inshorts.com/en/ajax/more_news'
    news_offset = "apwuhnrm-1"

    while True:
        response = requests.post(url, data={"category": "", "news_offset": news_offset}, headers=get_headers())
        # Stop as soon as the server answers with anything other than 200 OK.
        if response.status_code != 200:
            print(response.status_code)
            break

        response_json = json.loads(response.text)
        print_headlines(response_json["html"])
        # The returned min_news_id becomes the offset of the next request.
        news_offset = response_json["min_news_id"]