In an earlier article we learned how to scrape Twitter data using BeautifulSoup. But BeautifulSoup is slow, and we have to take care of several things ourselves, such as sending requests and following links.

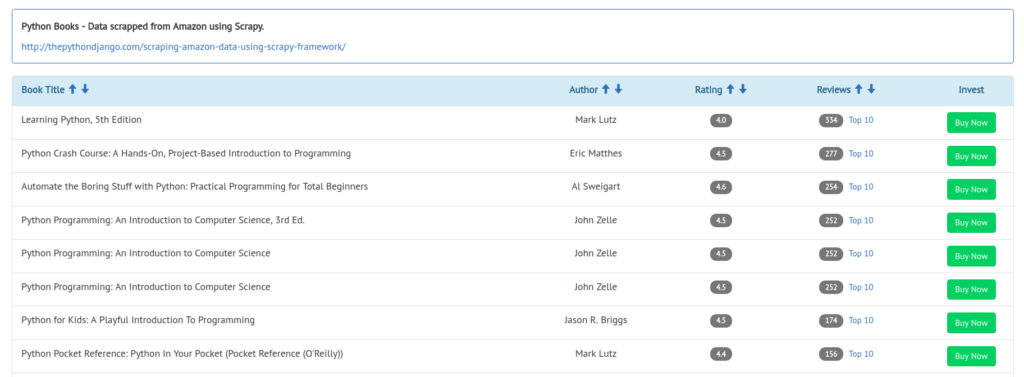

Here we will see how to scrape data from websites using Scrapy. I tried scraping Python book details from Amazon.com with Scrapy and found it extremely fast and easy.

We will see how to start working with Scrapy, create a scraper, scrape data and save it to a database.

The scraper code is available on GitHub.

Let's start building a scraper.

Setup:

First, create a virtual environment and activate it. Once it is activated, install the dependencies listed below in it (for example, by saving them to a requirements.txt file and running pip install -r requirements.txt):

```
asn1crypto==0.24.0
attrs==17.4.0
Automat==0.6.0
certifi==2018.1.18
cffi==1.11.4
chardet==3.0.4
constantly==15.1.0
cryptography==2.1.4
cssselect==1.0.3
hyperlink==17.3.1
idna==2.6
incremental==17.5.0
lxml==4.1.1
mysqlclient==1.3.10
parsel==1.3.1
pyasn1==0.4.2
pyasn1-modules==0.2.1
pycparser==2.18
PyDispatcher==2.0.5
pyOpenSSL==17.5.0
queuelib==1.4.2
requests==2.18.4
Scrapy==1.5.0
service-identity==17.0.0
six==1.11.0
Twisted==17.9.0
urllib3==1.22
w3lib==1.19.0
zope.interface==4.4.3
```

Now create a Scrapy project:

```
scrapy startproject amazonscrap
```

This creates a new folder with the following structure:

```
.
├── amazonscrap
│   ├── __init__.py
│   ├── items.py
│   ├── middlewares.py
│   ├── pipelines.py
│   ├── settings.py
│   └── spiders
│       └── __init__.py
└── scrapy.cfg
```

Writing the spider:

Spiders are classes that we write and that Scrapy uses to crawl websites and extract data from them.

Inside the spiders folder, create a spider class BooksSpider and write your code in it.

Give the spider a name, define a list of start URLs and write a parse method.

We will also keep a list of the books already scraped to avoid duplicate requests, although Scrapy's built-in duplicate request filter can take care of this by itself.

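```python
import scrapy

from amazonscrap.items import AmazonscrapItem


class BooksSpider(scrapy.Spider):
    name = "book-scraper"

    start_urls = [
        'https://www.amazon.com/Python-Crash-Course-Hands-Project-Based/product-reviews/1593276036/',
    ]

    # Prefix for the review pages of related books (used in parse below).
    # Assumed value: the snippets use self.base_url but do not define it.
    base_url = "https://www.amazon.com/product-reviews/"

    # IDs of books already scraped, so we don't request them again.
    books_already_scrapped = list()

    def parse(self, response):
        pass  # filled in below
```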

Since we also want the top 10 reviews of each book, we start from the product review URL. After one book's details are scraped, we collect the related books listed on the same page and scrape those as well. Before looking at the code in the parse method that extracts data from the page, let's see what an Item class is.

Scrapy Item:

One good thing about Scrapy is that it helps structure the data. We define our Item class in the items.py file; it works as a container for the scraped data.

```python
import scrapy


class AmazonscrapItem(scrapy.Item):
    book_id = scrapy.Field()
    title = scrapy.Field()
    author = scrapy.Field()
    rating = scrapy.Field()
    review_count = scrapy.Field()
    reviews = scrapy.Field()
```

Now let's go back to the parse method.

Parsing the response:

The parse method receives the response as its argument. We use CSS or XPath selectors to extract data from the response: the book title, author's name, rating, review count and book ID.

```python
def parse(self, response):
    book = AmazonscrapItem()

    # Title, cleaned with escape(), a spider helper not shown here (full code on GitHub).
    book["title"] = response.css('a[data-hook="product-link"]::text').extract_first()
    book["title"] = self.escape(book["title"])

    # Average rating, e.g. "4.5 out of 5 stars" -> 4.5
    rating = response.css('i[data-hook="average-star-rating"]')
    rating = rating.css('span[class="a-icon-alt"]::text').extract_first()
    book["rating"] = float(rating.replace(" out of 5 stars", ""))

    # Total number of reviews, e.g. "1,234" -> 1234
    review_count = response.css('span[data-hook="total-review-count"]::text').extract_first()
    review_count = review_count.replace(",", "")
    book["review_count"] = int(review_count)

    book["author"] = response.css('a[class="a-size-base a-link-normal"]::text').extract_first()

    # get_book_id() and get_reviews() are further spider helpers (full code on GitHub).
    book["book_id"] = self.get_book_id(response)
    book["reviews"] = self.get_reviews(response)
```
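The parse code above assumes every selector matches. extract_first() returns None when nothing matches, and calling .replace() on None raises AttributeError, which aborts parsing of that page. Below is a minimal sketch of more defensive conversion helpers. parse_rating, parse_review_count and book_id_from_url are hypothetical names, not the helpers from the original project. The book ID pattern assumes URLs shaped like the start URL used above.

```python
import re
from typing import Optional

# Hypothetical helpers (not part of the original spider) that turn the raw
# strings returned by extract_first() into typed values. extract_first()
# returns None when a selector matches nothing, so every helper accepts None.


def parse_rating(text: Optional[str]) -> Optional[float]:
    """'4.5 out of 5 stars' -> 4.5; None or unparsable text -> None."""
    if not text:
        return None
    match = re.search(r"(\d+(?:\.\d+)?)\s+out of 5", text)
    return float(match.group(1)) if match else None


def parse_review_count(text: Optional[str]) -> Optional[int]:
    """'1,234' -> 1234; None or text without digits -> None."""
    if not text:
        return None
    digits = re.sub(r"[^\d]", "", text)
    return int(digits) if digits else None


# Assumption: the 10-character book ID (ASIN) follows "/product-reviews/",
# as in this spider's start URL.
BOOK_ID_RE = re.compile(r"/product-reviews/([A-Z0-9]{10})")


def book_id_from_url(url: str) -> Optional[str]:
    """Extract the book ID from a product-review URL, or None if absent."""
    match = BOOK_ID_RE.search(url)
    return match.group(1) if match else None
```

Inside parse you could then write, for example, book["rating"] = parse_rating(rating) and book["book_id"] = book_id_from_url(response.url). You would then skip or log books whose values come back as None.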

Since we are only interested in Python books, we filter out everything else. For this I have written a simple utility function, is_python_book, which checks whether the word "python", "django" or "flask" appears in the title or in the review subjects and comments.

```python
@staticmethod
def is_python_book(item):
    """Return True if the title or any review mentions Python, Django or Flask."""
    keywords = ("python", "django", "flask")

    # Check the title.
    if any(x in item["title"].lower() for x in keywords):
        return True

    # Check review subjects and bodies (lower-cased, substring match).
    for review in item["reviews"]:
        text = (review["subject"] + " " + review["review_body"]).lower()
        if any(x in text for x in keywords):
            return True

    return False
```

Returning scraped item:

Once a book's data and its reviews are stored in the Item, we yield it; what happens to yielded items is explained in the next section. Before yielding, we check whether it is a Python book. If it is, the item is passed on for further processing; otherwise it is dropped.

```python
# Still inside parse(): only Python books are passed on to the pipeline.
if self.is_python_book(book):
    yield book
```

Generating next request:

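Once the first page is processed, we build the URLs of the related books found on it and yield a new request for each, with parse as the callback. This continues until the crawl is stopped manually or there are no new books left to request.

```python
# Still inside parse(): remember this book, then follow the related books.
self.books_already_scrapped.append(book["book_id"])

# get_more_books() is a spider helper not shown here (full code on GitHub);
# base_url is a class attribute of the spider.
more_book_ids = self.get_more_books(response)
for book_id in more_book_ids:
    if book_id not in self.books_already_scrapped:
        next_url = self.base_url + book_id + "/"
        yield scrapy.Request(next_url, callback=self.parse)
```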
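In this approach, a book ID is recorded only when that book's own page is parsed. A related book listed on several pages can therefore be queued more than once in the meantime, although Scrapy's duplicate request filter normally drops those repeats anyway. Below is a sketch of the alternative: mark IDs as seen when they are scheduled, and use a set for fast lookups. next_book_urls is a hypothetical helper, and the base URL in the test is an assumed value.

```python
from typing import Iterable, List, Set


def next_book_urls(base_url: str, related_ids: Iterable[str], seen: Set[str]) -> List[str]:
    """Return URLs for related books not seen yet, marking them as seen.

    Hypothetical helper: IDs are added to `seen` when they are scheduled,
    not when they are parsed, so the same book is never queued twice.
    """
    urls = []
    for book_id in related_ids:
        if book_id in seen:
            continue
        seen.add(book_id)
        urls.append(base_url + book_id + "/")
    return urls
```

Inside parse, the loop would then become `for url in next_book_urls(self.base_url, more_book_ids, seen): yield scrapy.Request(url, callback=self.parse)`. Here seen is a set attribute on the spider that already contains the current book's ID; it would be a hypothetical replacement for the books_already_scrapped list.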

Storing scraped data in the database:

When we yield a processed Item, Scrapy sends it to the Item Pipeline for further processing. We define our pipeline class in the pipelines.py file. This class is responsible for any further processing of the data, be it cleaning it, storing it in a database or writing it to text files.

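```python
import MySQLdb


class AmazonscrapPipeline(object):
    conn = None
    cur = None

    def process_item(self, item, spider):
        # Save the book. The values are passed separately so that MySQLdb
        # quotes and escapes them (titles may contain quotes).
        sql = ("INSERT INTO books (book_id, title, author, rating, review_count) "
               "VALUES (%s, %s, %s, %s, %s)")
        params = (item["book_id"], item["title"], item["author"],
                  item["rating"], item["review_count"])
        try:
            self.cur.execute(sql, params)
            self.conn.commit()
        except Exception as e:
            self.conn.rollback()
            spider.logger.error(repr(e))
        # Return the item so later pipelines and feed exports still receive it.
        return item
```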
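Building the INSERT statement with % string formatting breaks as soon as a title or author name contains a quote, such as "A Beginner's Guide", and it also allows SQL injection. Passing the values as a separate argument to execute() lets the database driver quote and escape them. The runnable sketch below demonstrates the idea with the standard library's sqlite3 module as a stand-in for MySQL. Note that sqlite3 uses ? placeholders where MySQLdb uses %s. The books table schema is an assumption, since the article does not show it.

```python
import sqlite3

# Assumed schema: the article does not show how the books table is created.
SCHEMA = """
CREATE TABLE IF NOT EXISTS books (
    book_id      TEXT PRIMARY KEY,
    title        TEXT,
    author       TEXT,
    rating       REAL,
    review_count INTEGER
)
"""


def save_book(conn, item):
    """Insert one book, letting sqlite3 bind the values to '?' placeholders."""
    sql = ("INSERT INTO books (book_id, title, author, rating, review_count) "
           "VALUES (?, ?, ?, ?, ?)")
    params = (item["book_id"], item["title"], item["author"],
              item["rating"], item["review_count"])
    try:
        conn.execute(sql, params)
        conn.commit()
    except sqlite3.Error:
        # Undo the failed insert, then let the caller decide how to report it.
        conn.rollback()
        raise


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    # The apostrophe would break a query built with '%s' string formatting.
    save_book(conn, {"book_id": "1234567890", "title": "A Beginner's Guide",
                     "author": "Jane Doe", "rating": 4.5, "review_count": 120})
    print(conn.execute("SELECT title FROM books").fetchone()[0])
```

In a pipeline, you would typically catch the error in process_item and log it instead of letting it propagate, so one bad row does not stop the crawl.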

We can open and close the database connection in the pipeline's open_spider and close_spider methods, which Scrapy calls when the spider is opened and closed.

```python
def open_spider(self, spider):
    # Called once when the spider starts: connect to MySQL.
    self.conn = MySQLdb.connect(host="localhost",    # your host
                                user="root",         # username
                                passwd="root",       # password
                                db="anuragrana_db")  # name of the database
    # Create a Cursor object to execute queries.
    self.cur = self.conn.cursor()

def close_spider(self, spider):
    # Called once when the spider closes: release the cursor and connection.
    self.cur.close()
    self.conn.close()
```
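The open_spider method above hard-codes the database host, user and password. A pipeline can instead define a from_crawler class method. Scrapy calls it with the crawler, whose settings attribute gives access to the values in settings.py. In the sketch below, MYSQL_HOST, MYSQL_USER, MYSQL_PASSWORD and MYSQL_DB are hypothetical setting names you would add to settings.py yourself; the defaults repeat the values used above.

```python
class AmazonscrapPipeline(object):
    """Sketch: MySQL connection parameters are read from the project settings."""

    def __init__(self, host, user, passwd, db):
        # Keyword arguments to pass to MySQLdb.connect() in open_spider().
        self.db_params = {"host": host, "user": user, "passwd": passwd, "db": db}
        self.conn = None
        self.cur = None

    @classmethod
    def from_crawler(cls, crawler):
        # Scrapy calls this to create the pipeline. crawler.settings holds the
        # values from settings.py; the MYSQL_* names are hypothetical settings
        # you would define yourself, with the article's values as defaults.
        settings = crawler.settings
        return cls(
            host=settings.get("MYSQL_HOST", "localhost"),
            user=settings.get("MYSQL_USER", "root"),
            passwd=settings.get("MYSQL_PASSWORD", "root"),
            db=settings.get("MYSQL_DB", "anuragrana_db"),
        )
```

open_spider can then connect with MySQLdb.connect(**self.db_params). A value can also be overridden for a single run, e.g. scrapy crawl book-scraper -s MYSQL_HOST=db.example.com.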

Points to Remember:

- Be polite to the sites you are scraping. Do not send too many concurrent requests.

- Respect the robots.txt file.

- If an API is available, use it instead of scraping the data.

Settings:

Project-wide settings are defined in the settings.py file.

- Make sure obeying the robots.txt file is set to True.

```python
# Obey robots.txt rules
ROBOTSTXT_OBEY = True
```

- You should add some delay between requests and limit the number of concurrent requests.

```python
DOWNLOAD_DELAY = 5
# The download delay setting will honor only one of:
CONCURRENT_REQUESTS_PER_DOMAIN = 1
```

- To process items in the pipeline, enable the pipeline.

```python
# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'amazonscrap.pipelines.AmazonscrapPipeline': 300,
}
```

- If you are testing the code and need to hit the same page frequently, enable the HTTP cache. It speeds up development for you and reduces the load on the website.

```python
# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
HTTPCACHE_ENABLED = True
```
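With DOWNLOAD_DELAY set, Scrapy by default (RANDOMIZE_DOWNLOAD_DELAY = True) waits a random time between 0.5 and 1.5 times the delay between requests to the same website. The small calculation sketch below shows what DOWNLOAD_DELAY = 5 implies. The function names are hypothetical, and the throughput figure ignores download time, so treat it as a rough ceiling rather than an exact rate.

```python
def delay_range(download_delay, randomize=True):
    """Shortest and longest wait (seconds) between requests to one site.

    Mirrors Scrapy's RANDOMIZE_DOWNLOAD_DELAY behaviour: a uniform random
    wait between 0.5 and 1.5 times DOWNLOAD_DELAY.
    """
    if not randomize:
        return (download_delay, download_delay)
    return (0.5 * download_delay, 1.5 * download_delay)


def max_requests_per_hour(download_delay):
    """Rough ceiling on requests per hour to one site.

    The randomized wait averages DOWNLOAD_DELAY, so about 3600 / delay
    requests per hour, before adding any download time.
    """
    return 3600 / download_delay


print(delay_range(5))            # (2.5, 7.5)
print(max_requests_per_hour(5))  # 720.0
```

So with the settings above, the spider makes at most roughly 720 requests per hour to Amazon.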

Avoiding 503 error:

You may get a 503 response status code for some requests. This usually happens because the scraper sends Scrapy's default User-Agent header, which identifies it as a bot. Set the USER_AGENT value in the settings file to a common browser user agent:

```python
# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'amazonscrap (+http://www.yourdomain.com)'
USER_AGENT = "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/27.0.1453.93 Safari/537.36"
```
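If the HTTP cache is enabled while testing, a 503 response can be stored in the cache and replayed on later runs, even after the user agent is changed. Scrapy's HTTPCACHE_IGNORE_HTTP_CODES setting lists status codes that should not be cached. The settings.py fragment below is a suggestion rather than part of the original project. HTTPCACHE_EXPIRATION_SECS = 0 is Scrapy's default and means cached pages never expire.

```python
# settings.py (fragment)
HTTPCACHE_ENABLED = True
# Don't cache "Service Unavailable" responses, so they are re-fetched next run.
HTTPCACHE_IGNORE_HTTP_CODES = [503]
# 0 (the default) means cached responses never expire.
HTTPCACHE_EXPIRATION_SECS = 0
```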

Feel free to download the code from GitHub and experiment with it. Try scraping data for books of another genre.

Read more: https://doc.scrapy.org/en/latest/intro/tutorial.html