I recently tried scraping tweets quickly using a Celery, RabbitMQ and Docker cluster.

Since I was hitting the same servers, I used rotating proxies via the Tor network. It turned out to be not very fast, and using a rotating proxy via Tor is not a nice thing to do. I was able to scrape approx. 10,000 tweets in 60 seconds, i.e. about 166 tweets per second. Not an impressive number. (But I was able to make Celery, RabbitMQ, a rotating proxy via the Tor network and Postgres work together in a Docker cluster.)

The above approach was not very fast, so I decided to compare the three approaches below for sending multiple requests and parsing the responses.

- Celery-RabbitMQ docker cluster

- Multi-Threading

- Scrapy framework

I planned to send requests to 1 million websites, but once I started, I figured out that it would take a whole day to finish, so I settled for 1000 URLs.

Celery RabbitMQ docker cluster:

I started with the Celery-RabbitMQ Docker cluster. I removed Tor from the cluster because now only one request is sent to each of 1000 different sites, which is perfectly fine. Please download the code from the GitHub repo and go through the README file to start the Docker cluster.

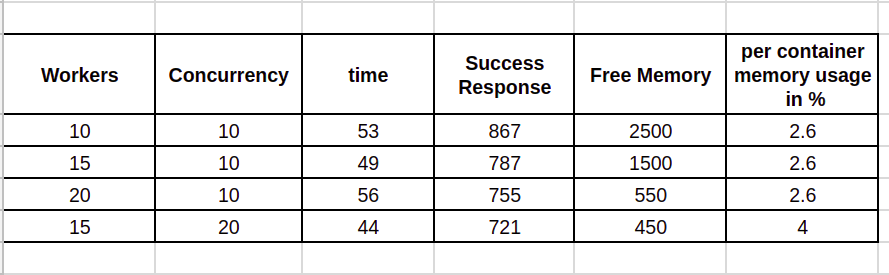

I first tried 10 workers with the concurrency of each worker set to 10. It took 53 seconds to send 1000 requests and get the responses.

I then increased the worker count to 15 and then to 20. Below is the result. Time is in seconds and free memory is in MB.

Every container takes some memory, so I tracked memory usage each time the worker count was increased.

As you can see, 15 workers, each with a concurrency of 20, took the least time to send 1000 requests, of which 721 returned HTTP status 200. But this also used the most memory.

We could achieve better performance if more memory were available. I ran this on a machine with 8 GB RAM. As the concurrency and worker count increased, fewer HTTP 200 responses were received.
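As a rough sanity check on these numbers, here is a small sketch that turns the figures quoted above into rates. The helper names are made up for illustration, and the extrapolation to 1 million URLs assumes throughput stays constant, which a real run would not guarantee.

```python
# Figures quoted in the text above.
TOTAL_REQUESTS = 1000
FIRST_RUN_SECONDS = 53        # 10 workers x 10 concurrency
SUCCESSFUL_RESPONSES = 721    # HTTP 200 in the 15 workers x 20 concurrency run


def requests_per_second(total_requests, seconds):
    """Average throughput of a run."""
    return total_requests / seconds


def hours_for(total_requests, rate_per_second):
    """Hours needed at a fixed rate.

    ASSUMPTION: throughput scales linearly and stays constant.
    """
    return total_requests / rate_per_second / 3600


first_run_rate = requests_per_second(TOTAL_REQUESTS, FIRST_RUN_SECONDS)
success_rate = SUCCESSFUL_RESPONSES / TOTAL_REQUESTS

print(f"first run: {first_run_rate:.1f} requests/second")
print(f"concurrent slots: {10 * 10} (first run), {15 * 20} (fastest run)")
print(f"success rate of fastest run: {success_rate:.1%}")
print(f"1 million URLs at the first-run rate: "
      f"{hours_for(1_000_000, first_run_rate):.1f} hours")
```

At roughly 19 requests per second, 1 million URLs would need close to 15 hours, which matches the "whole day" estimate that led to settling for 1000 URLs.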

Multi-Threaded Approach:

I used the simplest form of the multi-threaded approach to send multiple requests at once. The code is available in the multithreaded.py file.

Create a virtual environment and install dependencies. Run the code and measure the time.

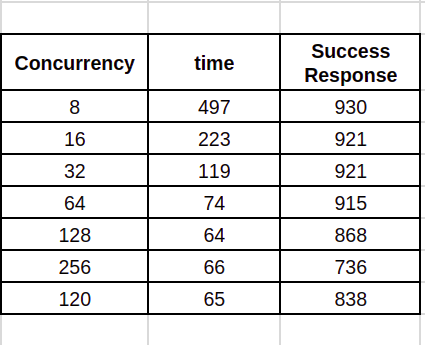

I started with 8 threads, then 16, and went up to 256 threads.

Since memory usage wasn't an issue this time, I didn't track it.

Here are the results.

As you can see, we got the best performance with 128 threads.
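For readers without the repo at hand, here is a minimal sketch of what such a multi-threaded run could look like using only the standard library. It is not the contents of multithreaded.py. The function names, the 10-second timeout and the doubling of thread counts between 16 and 256 are assumptions made for illustration.

```python
import time
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def fetch_status(url, timeout=10):
    """Return the HTTP status code, or the exception class name on failure."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as exc:
        return exc.code  # 4xx/5xx responses still carry a status code
    except Exception as exc:  # timeouts, DNS failures, refused connections
        return type(exc).__name__


def run_batch(urls, num_threads, fetch=fetch_status):
    """Fetch all URLs with num_threads threads; return (result counts, seconds)."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        results = list(pool.map(fetch, urls))
    return Counter(results), time.perf_counter() - started


def compare_thread_counts(path, thread_counts=(8, 16, 32, 64, 128, 256)):
    """Time one batch per thread count (intermediate counts are assumed)."""
    with open(path) as f:
        urls = [line.strip() for line in f if line.strip()]
    for num_threads in thread_counts:
        counts, seconds = run_batch(urls, num_threads)
        print(num_threads, round(seconds, 1), counts.most_common(3))
```

Calling compare_thread_counts("one_thousand_websites.txt") would print one line per thread count. The fetch parameter of run_batch exists so the timing logic can be tried with a fake function before hitting real sites.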

Scrapy Framework:

For this I created a Scrapy project where the start_urls variable was generated from the links in a text file.

Once the Scrapy project is created, create a spider in it.

spiders/myspider.py

    import scrapy
    from scrapy import signals


    # to run : scrapy crawl my-scraper
    class MySpider(scrapy.Spider):
        name = "my-scraper"
        start_urls = [
        ]

        status_timeouts = 0
        status_success = 0
        status_others = 0
        status_exception = 0

        def parse(self, response):
            print(response.status)
            if response.status == 200:
                self.status_success += 1
            else:
                self.status_others += 1
            print(self.status_success, self.status_others)

        def spider_opened(self):
            links = list()
            with open("one_thousand_websites.txt", "r") as f:
                links = f.readlines()
            self.start_urls = [link.strip() for link in links]

        @classmethod
        def from_crawler(cls, crawler, *args, **kwargs):
            spider = super(MySpider, cls).from_crawler(crawler, *args, **kwargs)
            crawler.signals.connect(spider.spider_opened, signal=signals.spider_opened)
            return spider

In the first run, Scrapy obeyed the robots.txt file, and the concurrent requests value was the default, i.e. 16.

My experience with Scrapy was the worst. There were lots of redirections from http to https, and then it would read the robots.txt file before fetching the response.

It ran for 1500 seconds and only 795 URLs had been processed when I aborted the process.

I then changed the default settings.

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36"
    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False
    # Configure maximum concurrent requests performed by Scrapy (default: 16)
    CONCURRENT_REQUESTS = 64

With the changed settings, I got a Couldn't bind: 24: Too many open files error.

I raised the maximum number of files that can be open at a time from 1024 to 10000 using the command ulimit -n 10000.

This didn't solve the problem.
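One thing worth checking in a situation like this is which limit the Python process itself sees, since ulimit -n only applies to the shell it is run in and to processes started from that shell. Whether that was the cause here is an open question. The sketch below uses the standard resource module (Unix only), and the function names are made up for illustration.

```python
import resource


def open_file_limits():
    """Return the (soft, hard) open-file limits of the current process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def raise_soft_limit(target):
    """Try to raise the soft open-file limit to target; return the new soft limit.

    The soft limit can never exceed the hard limit, so target is capped.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)
    if soft == resource.RLIM_INFINITY or soft >= target:
        return soft  # already high enough, nothing to do
    try:
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    except (ValueError, OSError):
        return soft  # the OS refused the change; keep the old limit
    return target


soft, hard = open_file_limits()
print("soft limit:", soft, "hard limit:", hard)
```

Running this in the same environment that starts the crawl shows whether the 10000 limit actually reached the process.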

I reduced the max concurrency to 64, but the error kept coming after processing approx. 500 URLs. Later on, Scrapy also started throwing a DNS lookup failed: no results for hostname lookup error.

At this point I didn't bother to debug these issues and gave up.
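For anyone who wants to pick this up, the Scrapy documentation has a page on broad crawls that suggests settings along the following lines. This is an untested sketch for this particular URL list. The first two values come from the settings above, and the rest are assumptions that may need tuning.

```python
# settings.py fragment: possible starting point for a one-request-per-site crawl.
# Everything below the first two settings is an ASSUMPTION, not something
# that was tried in the runs described above.

ROBOTSTXT_OBEY = False            # as in the changed settings above
CONCURRENT_REQUESTS = 64          # as in the changed settings above

DOWNLOAD_TIMEOUT = 15             # give up on slow sites sooner (default is 180)
RETRY_ENABLED = False             # do not re-queue failed requests
COOKIES_ENABLED = False           # cookies are not needed for a status check
REACTOR_THREADPOOL_MAXSIZE = 20   # more threads for DNS resolution (default is 10)
DNS_TIMEOUT = 10                  # seconds to wait for a DNS answer (default is 60)
LOG_LEVEL = "INFO"                # less logging overhead than DEBUG
```

A shorter download timeout and no retries would trade some successful responses for speed, which seems a fair comparison with the other two approaches.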

Conclusion:

If you need to send multiple requests quickly, the Docker cluster is the best option, provided you have enough memory at your disposal. It takes more time to set up and start than the multi-threaded approach. If you have limited memory, you may compromise a bit on speed and use the multi-threaded approach, which is also easy to set up.

Scrapy is not that good, or maybe I was not able to make it useful for me.

Please comment if you have any better approach or if you think I missed something while trying these approaches.